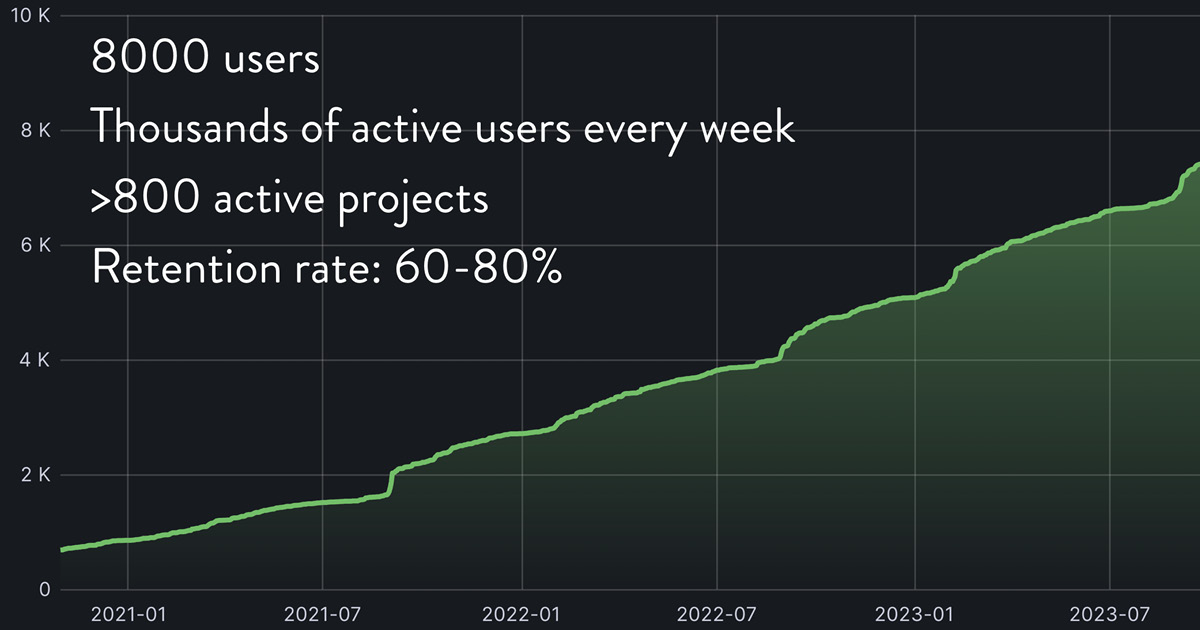

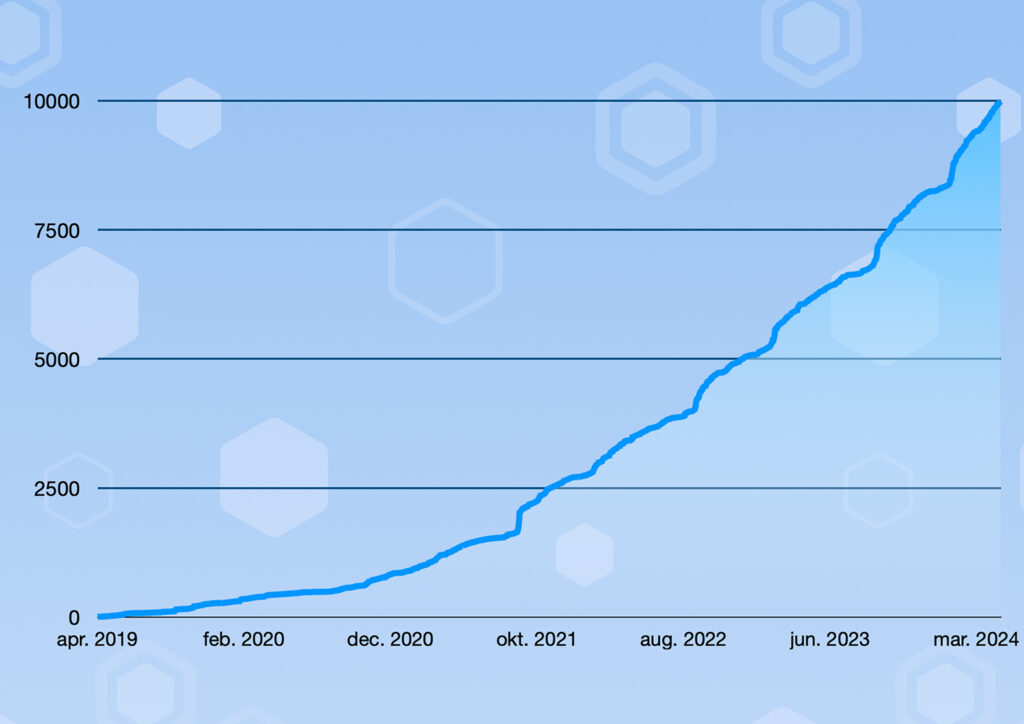

With more than 10,000 users, DeiC Interactive HPC has established itself as one of Europe’s most popular HPC facilities, thanks to an unprecedented democratisation of access to advanced computing resources. These resources, once reserved for specialised research fields and technically skilled users, are now accessible to any researcher with a dataset and a vision.

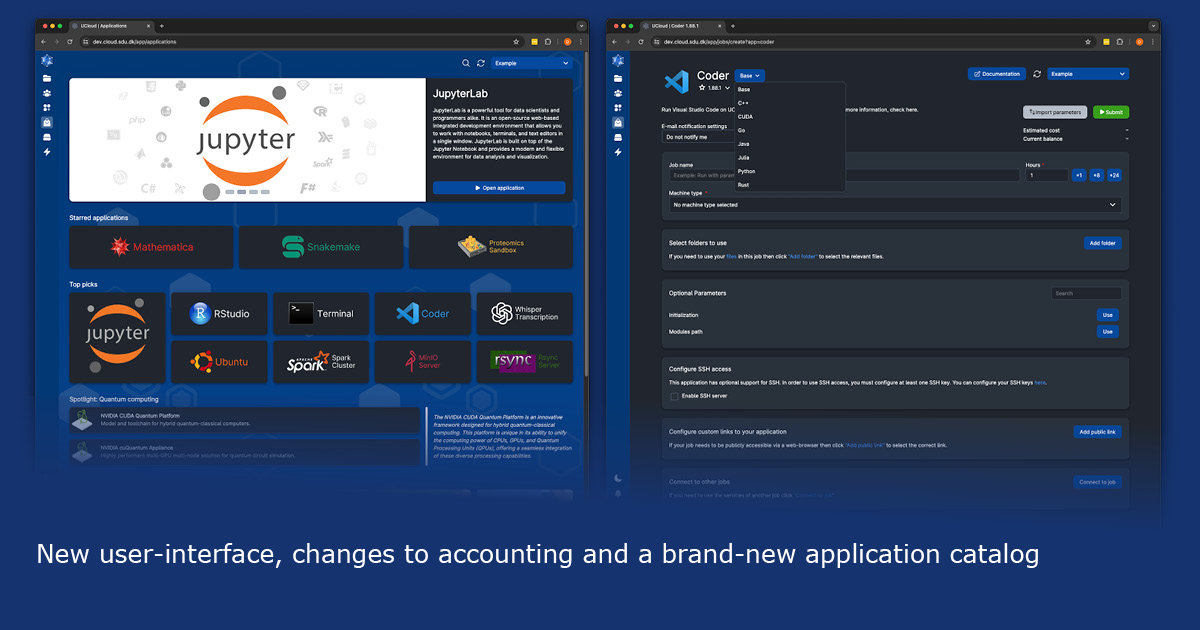

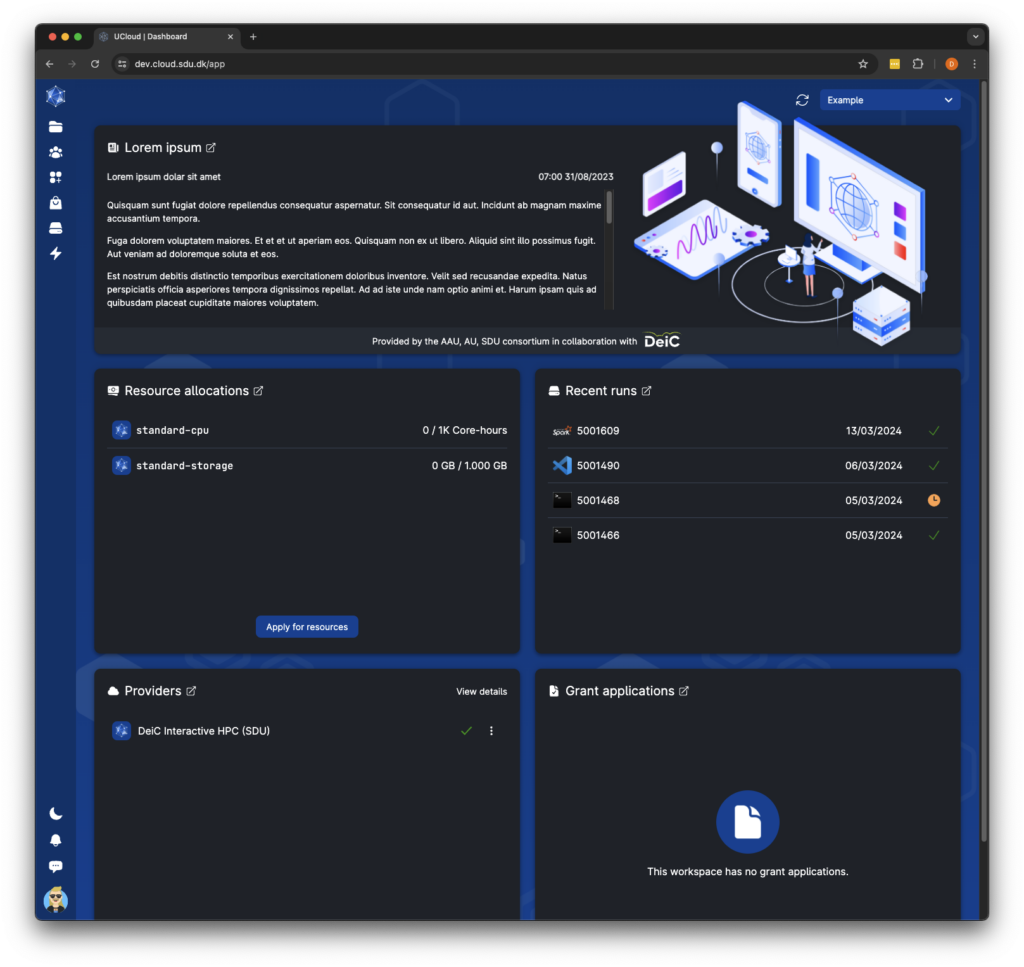

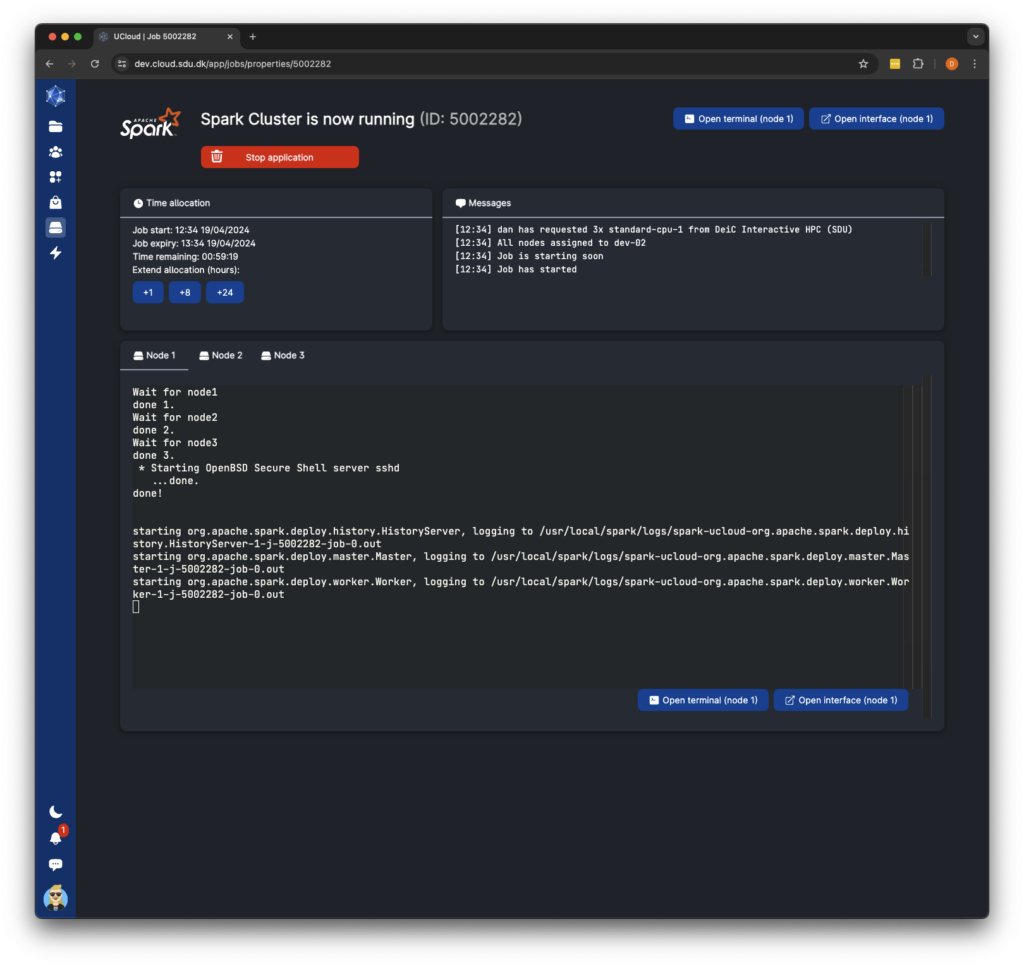

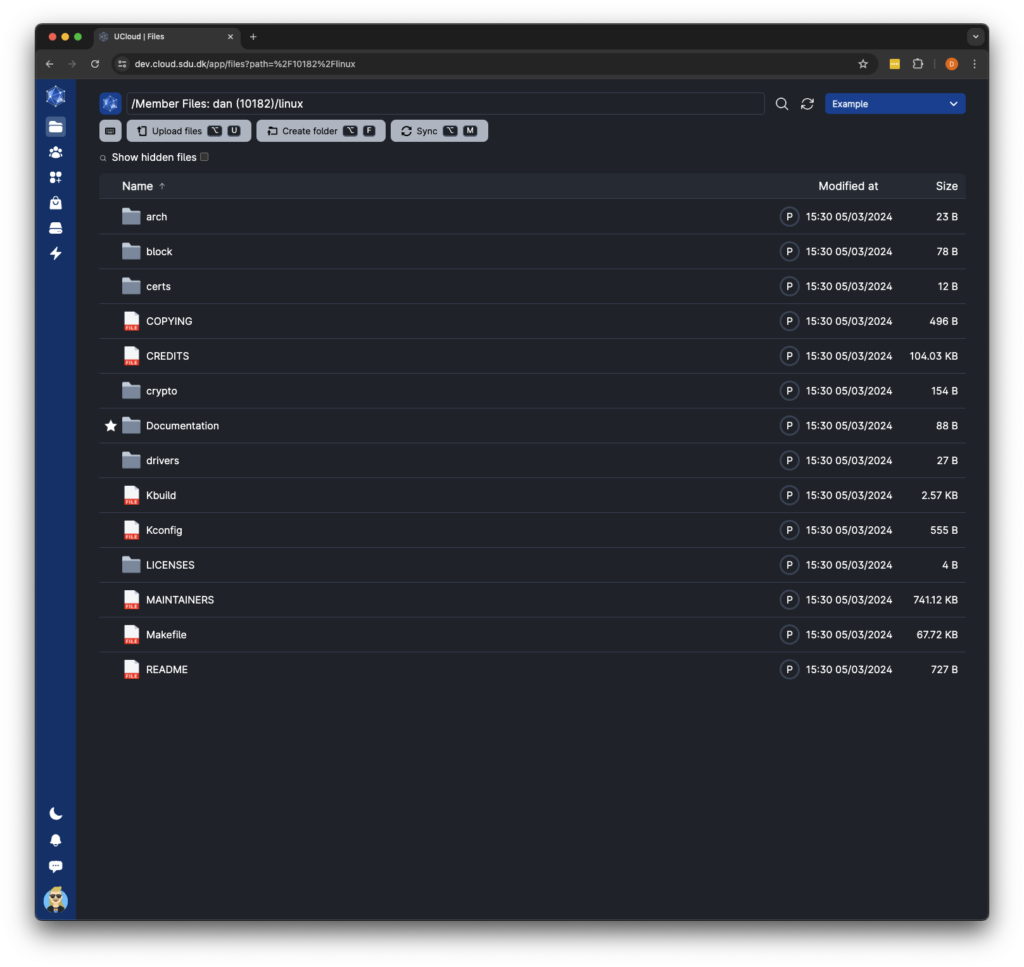

Through a newly developed, simple graphical user interface, DeiC Interactive HPC, also known as UCloud, makes it easier than ever to gain interactive access to supercomputing. This approach lowers technical barriers and strengthens research collaboration by offering shared, easily accessible virtual environments. As a result, DeiC Interactive HPC supports dynamic, interdisciplinary research, speeding up research processes and promoting innovation in fields ranging from bioinformatics to digital humanities.

Democratising Access to HPC

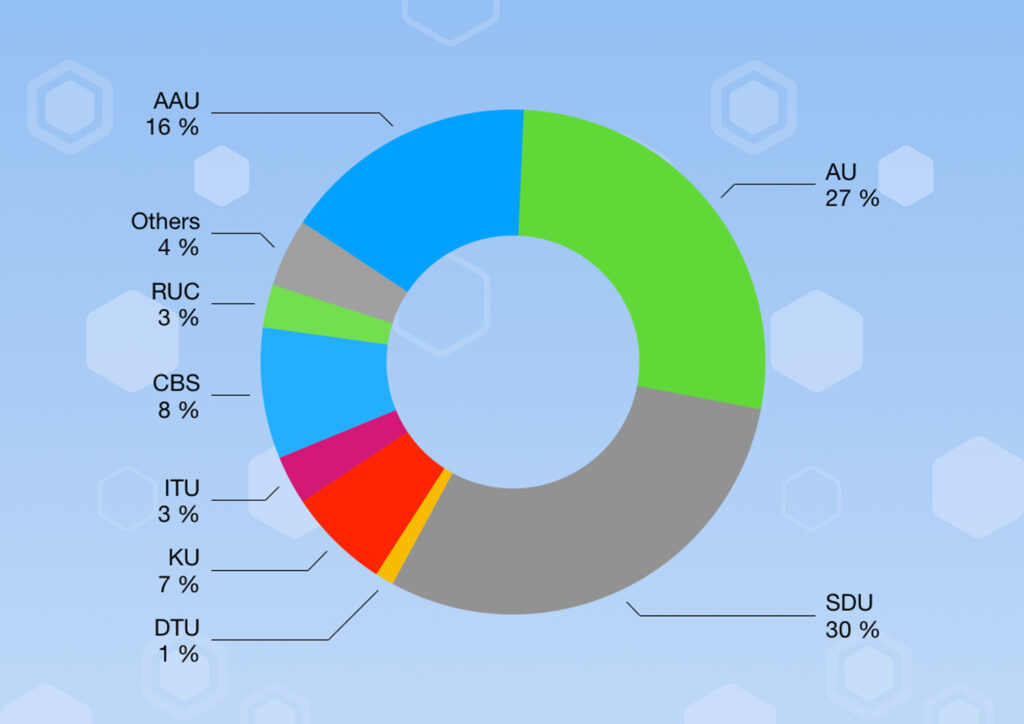

The trend towards more interactive use of technology, including HPC, reflects efforts to make STEM fields more inclusive and accessible, mirroring broader societal moves towards diversity and inclusion in technology and science. DeiC Interactive HPC’s user-friendly approach has attracted a broad spectrum of users from nearly all Danish universities, with widely varying levels of technical expertise; many of them are students.

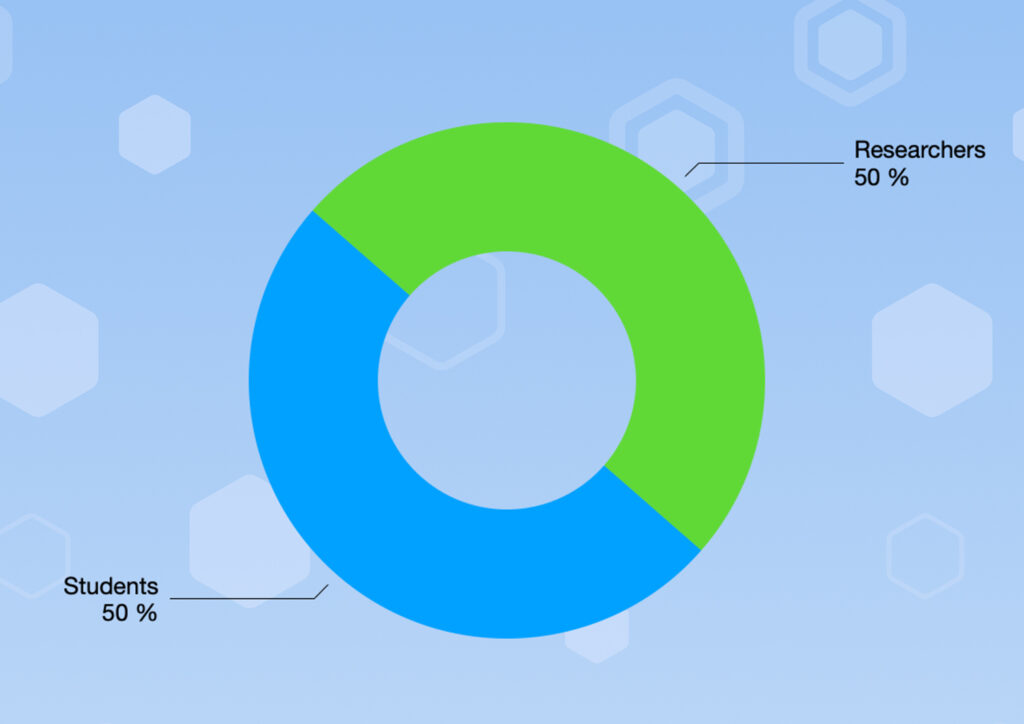

“We are proud to highlight the growing diversity among DeiC Interactive HPC users, a development that further distinguishes DeiC Interactive HPC from traditional HPC systems. We see continuous growth in user numbers and are now celebrating surpassing 10,000 users across a very broad spectrum of research disciplines, which is impressive in the HPC field. Of these users, 50% are students, reflecting DeiC Interactive HPC’s success in attracting new users and serving as a bridge to larger European HPC facilities,” says Professor Kristoffer Nielbo, representing Aarhus University in the DeiC Interactive HPC Consortium.

By simplifying access to supercomputers, DeiC Interactive HPC democratises powerful data processing resources, enabling a wider range of researchers and academics to conduct innovative research without the steep learning curve traditionally associated with HPC. This inclusivity fosters scientific collaboration and creativity, enriching the HPC community with a diversity of perspectives and ideas.

“We continuously work to improve DeiC Interactive HPC with a democratic approach, using user feedback to ensure our focus is in the right place. This is also reflected in our new update – UCloud version 2 – which aims to increase efficiency and improve the user experience for researchers. It is part of our DNA as an interactive HPC facility to always keep the user in mind and develop apps and user interfaces based on user needs. Therefore, we encourage our users to reach out to us with their wishes and ideas,” says Professor Claudio Pica, representing the University of Southern Denmark in the DeiC Interactive HPC Consortium.

Did You Know

– that DeiC Interactive HPC provides resources for developing large AI language models through initiatives such as Danish Foundation Models (DFM) and the new Danish Language Model Consortium?

– that nearly 80 apps are available via UCloud’s App Store, including the ChatGPT-like app Chat-UI and the transcription tools Transcriber and Whisper Transcription?

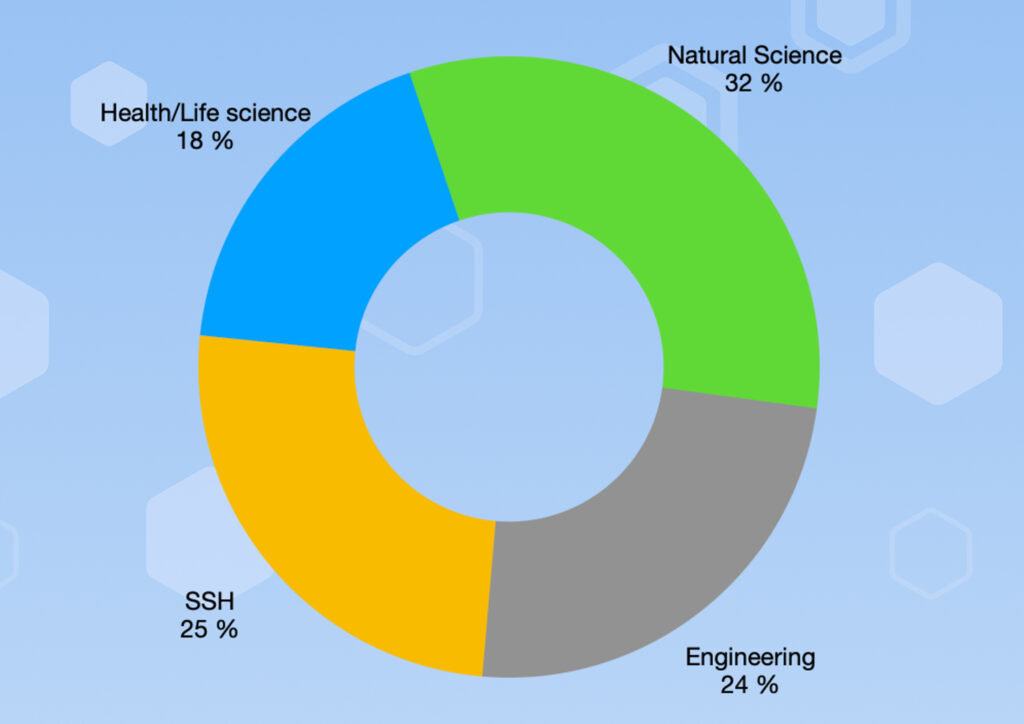

– that 25% of DeiC Interactive HPC users come from the Social Sciences & Humanities, an unusually high share given that HPC has traditionally catered almost exclusively to Natural Science, Health/Life Science, and Engineering?

An All-Danish and Highly Secure System

Despite its international-sounding name, UCloud, DeiC Interactive HPC is part of the Danish HPC landscape, funded by Danish universities and the Ministry of Education and Research. A growing focus on a new generation of highly user-friendly applications means that researchers and other university staff can now transcribe sensitive data with intuitive applications via DeiC Interactive HPC.

“DeiC Interactive HPC has already developed applications based on the same transcription technology found online and made them available in a secure environment through the UCloud platform. These transcription applications are just the beginning of a series of targeted secure applications that do not require prior experience, and we are always open to user input and ideas that arise from their unique needs but often prove beneficial to many,” says Lars Sørensen, Head of Digitalisation, representing Aalborg University and CLAAUDIA in the DeiC Interactive HPC Consortium.

By making advanced data processing more accessible to researchers from various disciplines, DeiC Interactive HPC helps break down the technical barriers that previously limited access to these resources. With an increasing number of students and new users from diverse backgrounds combined with continuous engagement in user-centred innovation, DeiC Interactive HPC not only supports the academic community but also plays a crucial role in promoting a more inclusive and productive research environment.

For further information and high resolution graphics, contact:

Kristoffer Nielbo, Director of the Center for Humanities Computing, Aarhus University, 26832608, kln@cas.au.dk

About DeiC Interactive HPC (UCloud)

UCloud offers access to advanced resources such as NVIDIA H100 GPUs and quantum simulation apps, as well as applications for data analysis and visualisation.

In data analysis, Python and Jupyter notebooks are particularly prominent, catering to the interactive, ad hoc, and data-centric workflows common in the field. These tools are highly valued because they cope well with rapidly changing software environments and offer rich user interfaces, a significant advantage over traditional HPC setups, which can be more complex and less flexible.

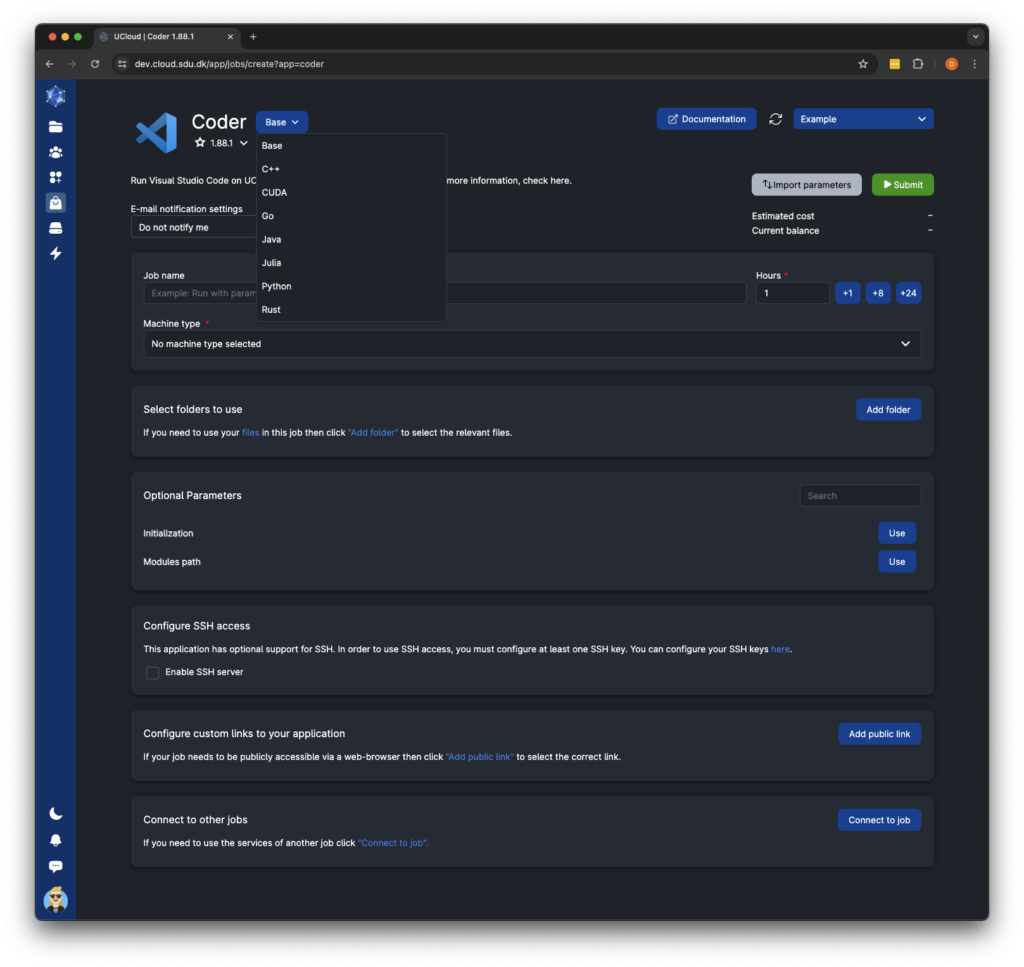

Furthermore, integrating tools such as Conda for software package management, Jupyter notebooks, RStudio, Coder, and Dask for parallel computing significantly improves the usability of HPC resources for interactive, on-demand data processing. These tools help bridge the gap between complex HPC hardware and the user-friendly software environments that data scientists need.


About the Consortium

DeiC Interactive HPC (UCloud) is a successful collaboration between three universities: Aalborg University (AAU), the University of Southern Denmark (SDU), and Aarhus University (AU).

Aalborg University, CLAAUDIA, represented by Lars Sørensen

University of Southern Denmark (SDU), eScience Center, represented by Professor Claudio Pica

Aarhus University, Center for Humanities Computing, represented by Professor Kristoffer Nielbo