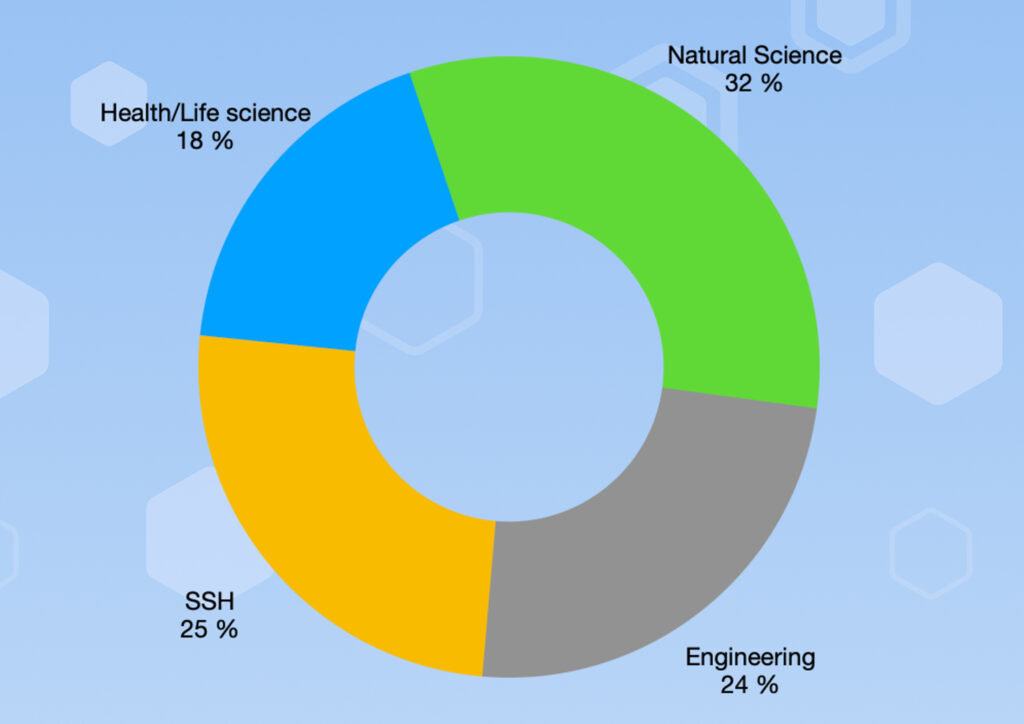

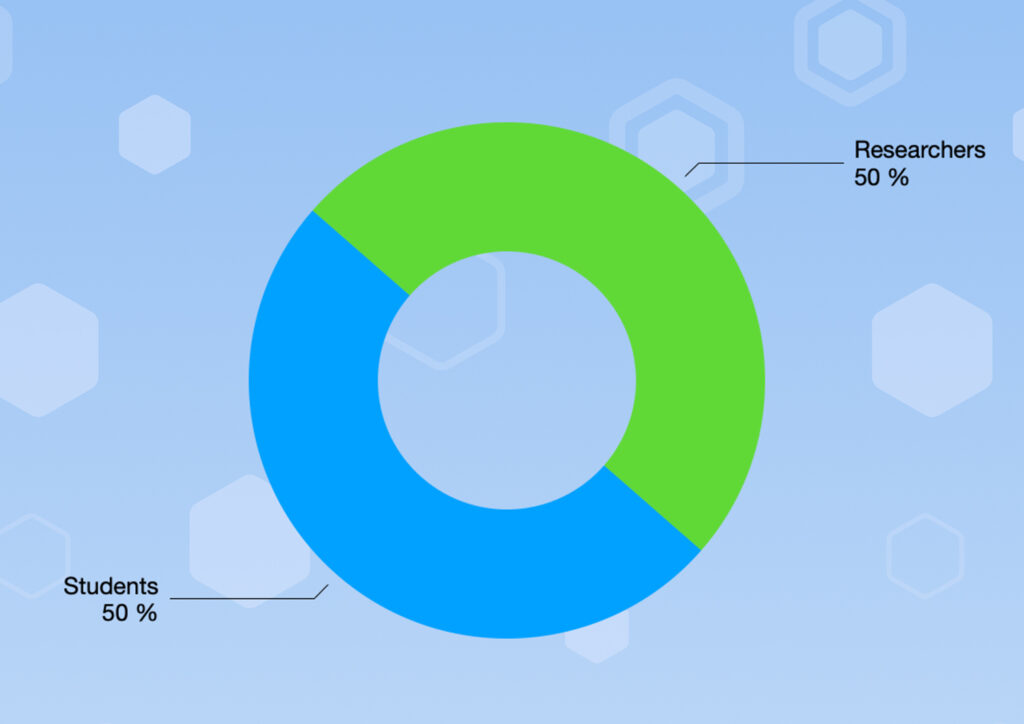

Supercomputing has long been associated with areas such as physics, engineering, and data science. However, humanities researchers at Aarhus University are increasingly turning to supercomputing, which allows them to delve into unexplored territory and discover new insights.

From analysing historical archives to simulating ancient civilizations to analysing social media data, supercomputing offers unique opportunities to generate insights and advance knowledge in humanities.

In this article series, we highlight three cases with humanities researchers from Aarhus University that illustrate the varied ways in which supercomputing is being used in humanities research.

Iza Romanowska is an assistant professor at Aarhus University, working at the Aarhus Institute of Advanced Studies, where she studies complex ancient societies.

To overcome the challenge of limited data from these ancient societies, researchers have started using agent-based models (ABMs), sometimes enabled by supercomputing. ABMs are computational models that simulate the behaviour and interactions of individual entities, known as agents, within a specified environment or system. Each agent in the model is typically programmed with a set of rules or algorithms that control its behaviour, decision-making processes, and interactions with other agents and the environment.
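To make the idea of agents, rules, and interactions concrete, here is a minimal sketch in plain Python. It is not the model used in the research described in this article: the `Trader` class, the ware names, and the copying rule are hypothetical choices made purely for illustration.

```python
import random
from collections import Counter
from dataclasses import dataclass

# Hypothetical product types; the names are illustrative only.
WARES = ["ware_A", "ware_B", "ware_C"]


@dataclass
class Trader:
    """An agent that sells one ware and follows a simple copying rule."""
    ware: str

    def step(self, others, rng, copy_prob):
        # Rule: meet one randomly chosen other trader and, with
        # probability copy_prob, switch to the ware that trader sells.
        partner = rng.choice(others)
        if rng.random() < copy_prob:
            self.ware = partner.ware


def run_model(n_traders=50, n_steps=100, copy_prob=0.3, seed=0):
    """Run one simulation and return how many traders sell each ware."""
    rng = random.Random(seed)  # seeded so that a run can be repeated exactly
    traders = [Trader(rng.choice(WARES)) for _ in range(n_traders)]
    for _ in range(n_steps):
        for trader in traders:
            others = [t for t in traders if t is not trader]
            trader.step(others, rng, copy_prob)
    return Counter(t.ware for t in traders)


counts = run_model()
print(counts)
```

The resulting distribution of wares is the kind of simulated pattern that could then be compared against an observed one, for example counts of ceramic types at excavated sites.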

ABM is a valuable tool in archaeology that allows us to simulate and analyse the behaviours and interactions of individuals or groups in past societies, and the use of ABM allows comparison of the model against real archaeological data.

Assistant Professor Iza Romanowska

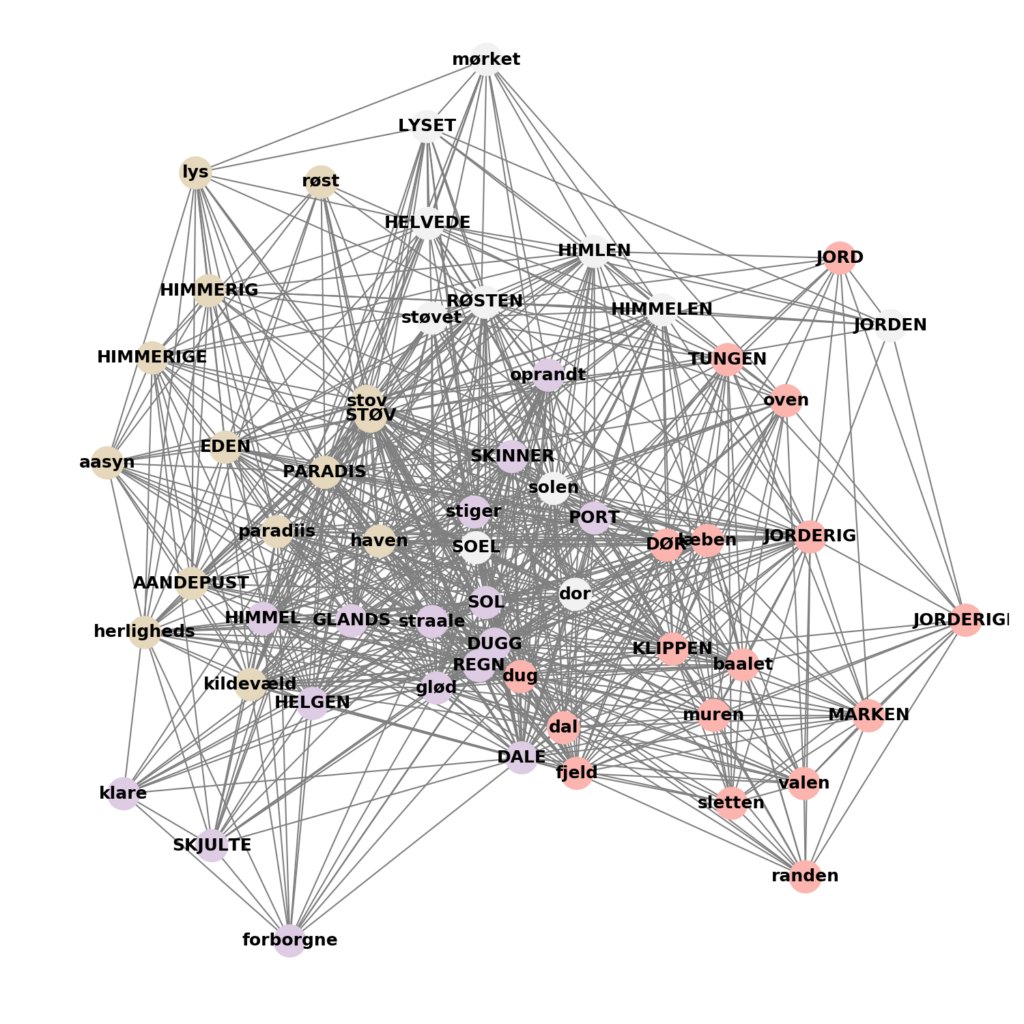

In one of Iza Romanowska’s studies, agent-based modelling (ABM) made it possible for her and her colleagues to explore the Roman economy in the context of long-distance trade, using ceramic tableware to understand the distribution patterns and buying strategies of traders in the Eastern Mediterranean between 200 BC and AD 300.

The potential of supercomputing in humanities becomes particularly evident when studying societies for which only limited data survive, as archaeologists and historians often must. Iza Romanowska explains that data availability in her field is limited compared to other disciplines: while social scientists studying more contemporary populations have access to abundant data such as the number of traders, transactions, and values, “we have none of this information.” The use of HPC has therefore been essential for her research.

ABM as methodological tool necessitates running the simulation many times, and by many, I mean eight hundred thousand times, and that is possible with a laptop… if one plans to be doing their Ph.D. for 500 years. Supercomputing is bigger, faster, better without any qualitative change in terms of the research.

Assistant Professor Iza Romanowska

Using a high-performance computer like the DeiC Interactive HPC system enhances the scalability and speed of ABMs, allowing researchers to gain deeper insights into the behaviour and outcomes of complex systems. The DeiC Interactive HPC facility hosts out-of-the-box tools, like NetLogo, for working with ABM. Researchers can also use ABM frameworks for Python or R in one of the many development apps like JupyterLab or Coder.
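Running a stochastic model many times is what makes ABM a natural fit for HPC, because each run is independent of the others. The sketch below shows that pattern in plain Python, under stated assumptions: `simulate` is a hypothetical toy stand-in for one model run, and `run_batch` is an illustrative helper, not part of any HPC platform or ABM framework.

```python
import random
import statistics


def simulate(seed, n_agents=30, n_steps=40):
    """Toy stand-in for one ABM run; returns the share of the majority option."""
    rng = random.Random(seed)  # one seed per run keeps every run reproducible
    choices = [rng.randrange(2) for _ in range(n_agents)]
    for _ in range(n_steps):
        i = rng.randrange(n_agents)
        j = rng.randrange(n_agents)
        choices[i] = choices[j]  # agent i copies agent j's current option
    ones = sum(choices)
    return max(ones, n_agents - ones) / n_agents


def run_batch(seeds, mapper=map):
    """Run one replicate per seed; `mapper` can be swapped for a parallel map."""
    return list(mapper(simulate, seeds))


results = run_batch(range(200))
print(f"{len(results)} runs, mean majority share = {statistics.mean(results):.3f}")
```

Because the runs do not depend on each other, the built-in `map` used here could be replaced by the `map` method of a `concurrent.futures.ProcessPoolExecutor`, or the list of seeds could be split across several jobs, so that more cores mean more runs completed in the same time.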

Supercomputing and coding as research tools advance humanities research

While humanities data in general is plentiful and can be analysed effectively, Iza Romanowska finds that there is a gap in understanding the underlying processes that generate the observed patterns, resulting in underdeveloped explanatory frameworks. Her point is that the lack of formal tools for theory building and testing remains a major disciplinary issue.

“Within humanities including archaeology and history, data analysis is well-established. However, there’s a kind of fundamental disciplinary problem in that we don’t have or use many computational tools for theory building and theory testing. Supercomputing as a tool for the humanities can help fill this gap and strengthen theory building, and ultimately it can advance the field of humanities research.”

Assistant Professor Iza Romanowska

Iza Romanowska believes that more people in humanities should learn to code to take advantage of the possibilities offered by their data. She suggests that supercomputing can be a natural progression from this. While many humanities researchers may not feel like they need supercomputing, perhaps they are simply not asking questions that could benefit from high-performance computing (HPC).

I would especially encourage junior researchers in the humanities to embrace supercomputing. It never hurts to acquire a skill, and many of these tools are becoming so easily available that it’s almost a shame to not use them.

You have just read the second of three cases in our series on Interactive HPC usage in humanities.

Through these compelling cases it becomes evident that supercomputing in humanities research is transforming traditional approaches, empowering researchers to uncover new insights and deepen our understanding of the field. It opens doors to interdisciplinary collaborations and expands the possibilities for data analysis and modelling, ultimately shaping the future of digital humanities.

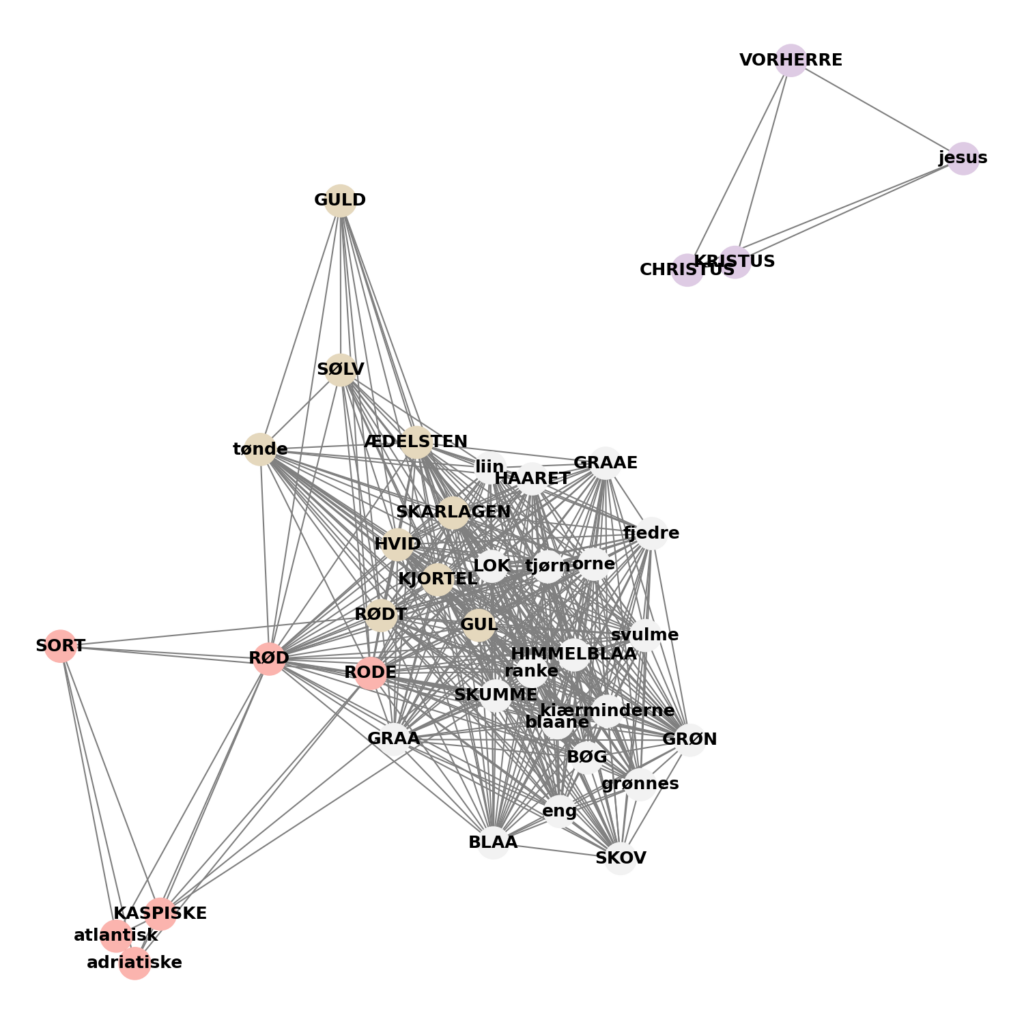

Stay tuned for our third case, featuring Rebekah Baglini representing her field of linguistics, and check out the first case, featuring Katrine Frøkjær Baunvig and the creation of a Grundtvig artificial intelligence using HPC.