Supercomputing has long been associated with fields such as physics, engineering, and data science. However, humanities researchers at Aarhus University are increasingly turning to supercomputing, which allows them to explore new territory and uncover new insights.

From analysing historical archives to simulating ancient civilizations and processing social media data, supercomputing offers unique opportunities to generate insights and advance knowledge in the humanities.

In this article series, we highlight three cases featuring humanities researchers from Aarhus University that illustrate the varied ways in which supercomputing is being used in humanities research.

While many studies are based on historical data, the research of Rebekah Baglini, Associate Professor in Linguistics at the Interacting Minds Centre, Aarhus University, is an excellent example of supercomputing applied to recent data in the humanities.

She uses supercomputing in her current projects, which involve collecting, processing, and annotating large-scale data from traditional and social media sources. By examining this diverse range of data, Rebekah Baglini investigates causal inference and causal reasoning from a linguistic perspective. Her research applies semantic model theory and computational methods to uncover new insights in linguistics.

“I aim to develop computationally assisted methods to identify trends in the discursive and informational landscape around topics concerning media dynamics, public health and science communication, crisis and risk messaging, as well as the emergence of mis- and dis-information”.

Rebekah Baglini, Associate Professor in Linguistics, Aarhus University

In addition to her linguistic investigations, Rebekah Baglini also works to improve existing computational language models for multilingual natural language processing (NLP), with a particular focus on under-resourced languages.

Humanities researchers should know the affordances of High-Performance Computing (HPC)

Rebekah Baglini’s work reflects the continued growth of the digital humanities and the ongoing efforts to improve existing language models, both of which contribute to a deeper understanding within the humanities.

“My earlier work involved smaller language corpora and didn’t require HPC resources. However, as my projects grew in scale, involving large corpus creation, the relevance of supercomputing increased. I recognise that not all projects require HPC. However, it is useful for researchers to gain training in the affordances of HPC, parallel compute, and large models so they know what’s possible, and can potentially take on projects of larger scale or make use of state-of-the-art resources for data processing, modelling, and simulation.”

Rebekah Baglini, Associate Professor in Linguistics, Aarhus University

This explains why NLP and computational linguistics have become integral to Rebekah Baglini’s teaching: they give her students hands-on experience working with extensive datasets and large language models. She emphasises, however, that there is a significant learning curve when getting started with supercomputing.

“There has definitely been a learning curve involved in the transition from locally maintained clusters to the cloud-based Interactive HPC platform, particularly because it is also a somewhat new service without comprehensive documentation. My affiliation with the Center for Humanities Computing at Aarhus University has been a valuable resource, as there is a great deal of collective experience and knowledge to draw on in the community.”

Rebekah Baglini, Associate Professor in Linguistics, Aarhus University

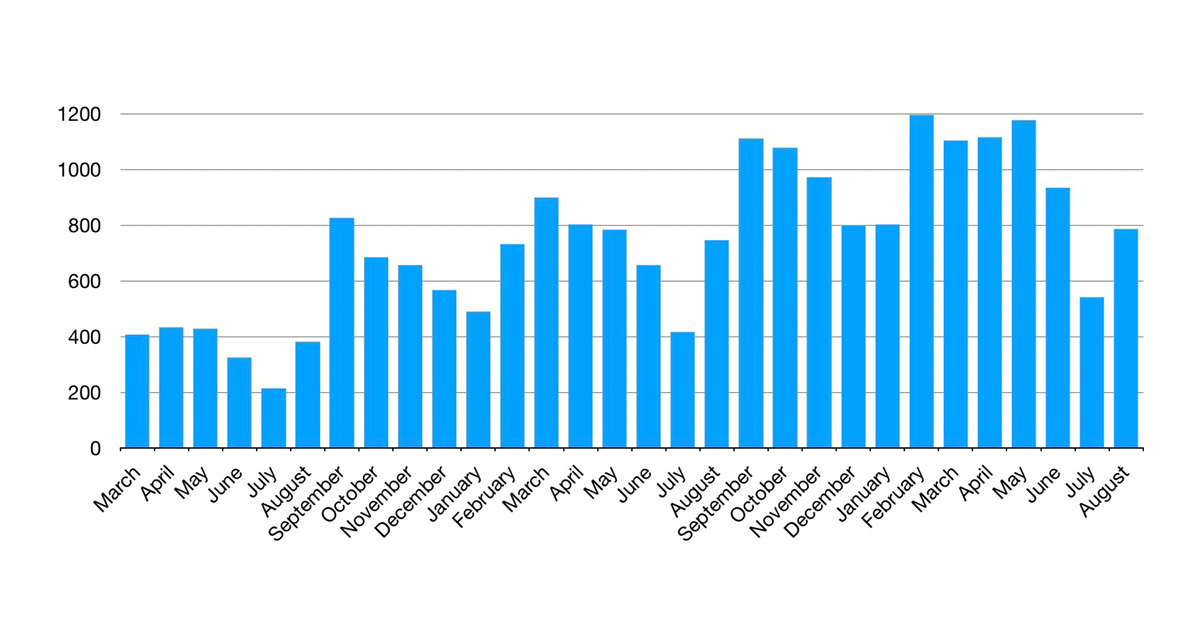

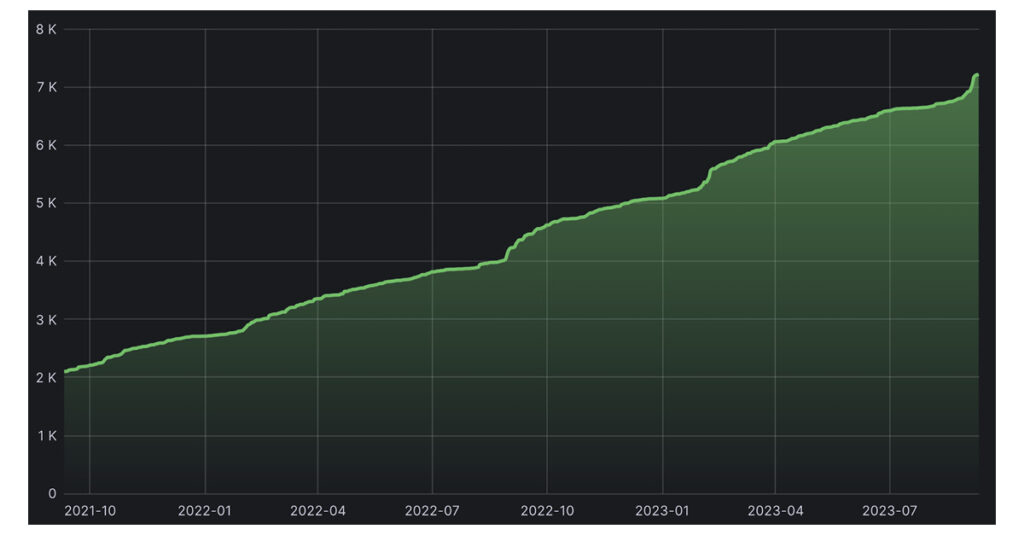

Rebekah Baglini has used the DeiC Interactive HPC system to store and analyse news and social media data in HOPE, a national research project that monitored Scandinavian user behaviour during the Covid-19 pandemic.

Today she uses the system in her AUFF Starting Grant project, CROSS: Causal Reasoning and Online Science Scepticism, to train language models to identify and analyse emerging narratives that undermine or counteract verified messaging on scientific findings and public health recommendations.

You have just read the third and final case in our series on Interactive HPC usage in humanities.

Taken together, these cases show that supercomputing is transforming traditional approaches to humanities research, enabling researchers to uncover new insights and deepen our understanding of the field. It opens doors to interdisciplinary collaboration and expands the possibilities for data analysis and modelling, helping to shape the future of the digital humanities.

Check out the other two cases in the series: Katrine Frøkjær Baunvig on creating a Grundtvig artificial intelligence using HPC, and Iza Romanowska on utilizing agent-based models with archaeological data.